What we learned Legacy

Thoughts of What we learned from Legacy

What is legacy in IT industry

Check out this video for podcasting: No matter what the tool is

-

Legacy technologies such as Informatica, SSIS, and SQL Server are often seen as outdated, but they are still widely used in the big large company (exclude IT company)

-

Because they had digital transformation quite early, and keep it works for a longtime.

- Pain-point of legacy:

- Maintenance, Support, and Vendor lock: developers, older tech, get helps, security risk due to out-date

- Integration Issue: Less support integration, data silos

- Flexibility Issue: Not design to handle business demands of today

- Performance Issue: unable to handle large amounts of data, not HA-DR

- Fact:

- Legacy systems often contain valuable data and business logic, therefore, it’s important to carefully plan any migration to ensure that this valuable information is preserved.

- MUST have solid migration strategies for modernizing legacy system: like Incremental Modernization, Rehosting, Replatforming, Replacing, Rearcitecting

Why is it Important to Understand Legacy Technologies?

- Widely used in many industries

- Provide a solid foundation for understanding newer technologies

Learning from Legacy Technologies

Informatica

ETL tool that is used to extract, transform, and load data. Learning Informatica involves understanding its architecture, components, and how to design ETL workflows.

<!-- Example of Informatica mapping -->

<MAPPING DESCRIPTION ="" ISVALID ="YES" NAME ="m_Customer" OBJECTVERSION ="1" VERSIONNUMBER ="1">

...

</MAPPING>

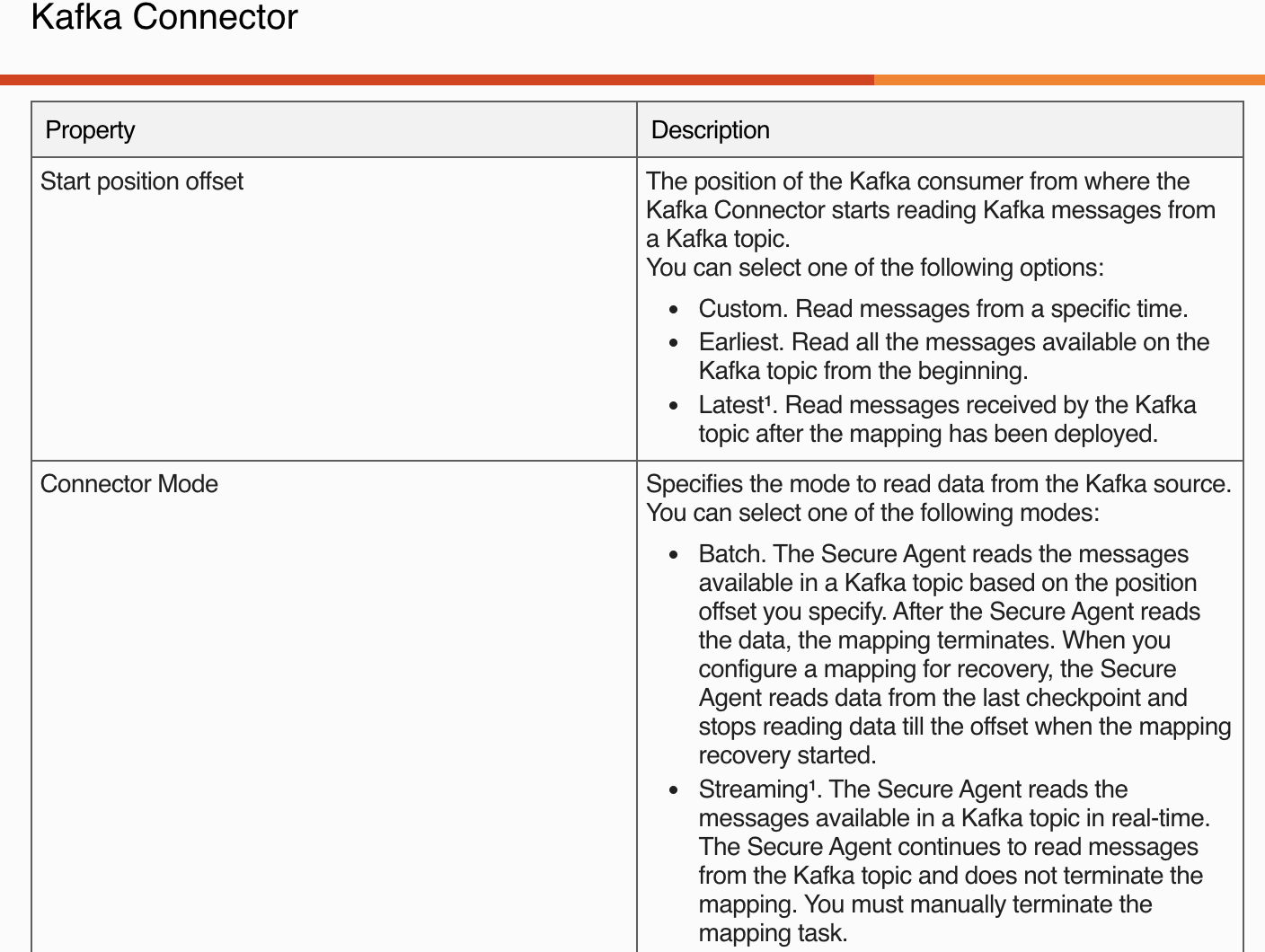

Example: Check Kafka Connection Properties

{ width=800px height=200px style=”border-radius: 10px; box-shadow: 0px 0px 10px black; font-weight: bold;” }

{ width=800px height=200px style=”border-radius: 10px; box-shadow: 0px 0px 10px black; font-weight: bold;” }

SSIS (SQL Server Integration Services)

- Platform for data integration and workflow applications.

- Building and integrating and processing data

SQL Server

-

SQL Server is a relational database management system. Learning SQL Server involves understanding SQL syntax, database design, and performance tuning.

-

Example of SQL Server query

SELECT TOP 100 * FROM Engineers WHERE Country = 'VIETNAM';

Learning from New Technologies

Azure Data Factory, BUT…

- A cloud-based data integration service that allows you to create data-driven workflows.

- Orchestrating and automating data movement and data transformation.

{

"name": "MyPipeline",

"properties": {

"activities": [

{

"name": "CopyActivity",

"type": "Copy",

"inputs": [

{

"referenceName": "<input dataset name>",

"type": "DatasetReference"

}

],

"outputs": [

{

"referenceName": "<output dataset name>",

"type": "DatasetReference"

}

],

"typeProperties": {

"source": {

"type": "BlobSource"

},

"sink": {

"type": "BlobSink"

}

}

}

]

}

}

Databricks, WITH …

- Unified analytics platform that accelerates innovation by unifying data science, engineering, and business

# Databricks notebook source

from pyspark.sql import SparkSession

# Create a SparkSession

spark = SparkSession.builder.appName("MyApp").getOrCreate()

# Load data

df = spark.read.format("csv").option("header", "true").load("/databricks-datasets/samples/data.csv")

# Perform some transformations

df_transformed = df.select("column1", "column2").filter(df["column1"] > 0)

# Show the result

df_transformed.show()

Deploying a Databricks Workspace

ARM template for deploying a Databricks workspace

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"workspaceName": {

"type": "string"

},

"location": {

"type": "string"

}

},

"resources": [

{

"type": "Microsoft.Databricks/workspaces",

"apiVersion": "2018-04-01",

"name": "[parameters('workspaceName')]",

"location": "[parameters('location')]",

"sku": {

"name": "standard"

},

"properties": {

"ManagedPrivateNetwork": "Disabled"

}

}

]

}

Deploying a Notebook

Using the Databricks REST API

import requests

# Databricks domain, token, and workspace path

domain = "<databricks-domain>"

token = "<databricks-token>"

workspace_path = "/Workspace/my-notebook"

# Notebook content

notebook_content = """

# Databricks notebook source

print("Hello, world!")

"""

# API endpoint

url = f"https://{domain}/api/2.0/workspace/import"

# Headers

headers = {

"Authorization": f"Bearer {token}",

"Content-Type": "application/json"

}

# Request body

body = {

"content": notebook_content.encode("base64"),

"path": workspace_path,

"language": "PYTHON",

"overwrite": True,

"format": "SOURCE"

}

# Send the request

response = requests.post(url, headers=headers, json=body)

# Check the response

if response.status_code == 200:

print("Notebook deployed successfully.")

else:

print("Failed to deploy notebook:", response.json())

Conclusion

-

Legacy technologies like Informatica, SSIS, and SQL Server can provide valuable learning opportunities.

-

By understanding and learning from these technologies, you can gain a deeper understanding of data management and ETL processes, which are still crucial in today’s data-driven world.

-

Building solid foundation knowledge and how the basis things work before jumping into other more “Easier”.

- I mentioned “Easier” because the improvement, revolution of tools, platform with less managed from end-users.

- You should check this and start from there, good reads Mordern Data Platform

Keep stay tuned for learning!